As enterprises start actively leveraging AI to unlock efficiencies, enhance customer experiences, and gain a competitive edge, the question quickly becomes: what approach should we take? More specifically, what kind of AI architecture is right for us?

In this post, we’ll look at the three most common architectures we are seeing in enterprise trials:

- GPTs with prompt engineering,

- Retrieval-Augmented Generation (RAG), and

- Fine-tuning models.

This post explores how each one works, where it applies in practice, and why enterprises should consider it. Finally, it compares their strengths and weaknesses so you can decide which approach suits your organization’s AI maturity.

1. GPTs with Prompt Engineering: The Entry-Level Option

How It Works

GPT models are large, general-purpose AI systems pre-trained on vast datasets. By now most people are familiar with OpenAI’s models through ChatGPT, but there are many alternatives, including Phi, Llama, and Mistral, depending on your needs. Enterprises can use these models without significant additional investment by applying prompt engineering: crafting specific prompts and system messages that shape how the model responds to user queries.

System prompts set the tone and behavior of the model, while user prompts guide specific interactions.

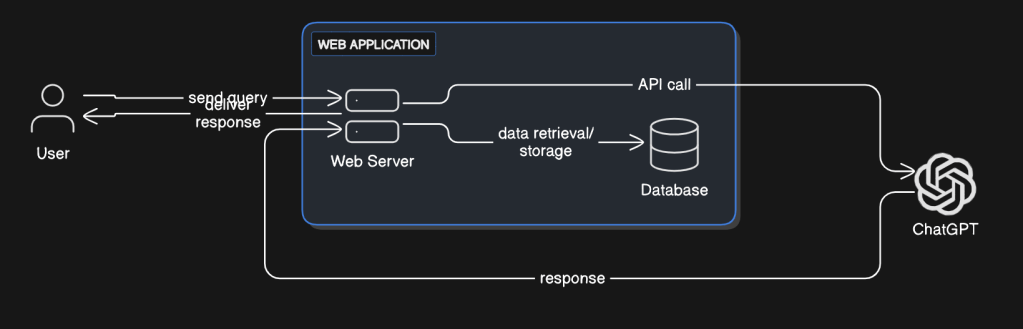

Example Architecture:

The power of this approach is its simplicity, although that comes with limits on how much control you have. The application architecture is a simple two-tier app/database design, with the web server managing the outbound calls to the LLM. Because the server sits between the user and the model, the enterprise controls the chat context and can add system prompts to steer the conversation. That is something you can’t do with free-form conversations, such as the default Copilot on your Windows machine.

- System prompt: ‘You are a financial advisor assisting clients with investment strategies. Only answer questions about investment strategies; otherwise, respond “That’s not in my skills, do you have a financial question?”’

- User prompt: ‘What’s a good investment strategy for someone saving for retirement?’

The model answers the user prompt from its pre-trained knowledge, staying within the boundaries set by the system prompt.
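To make the two-tier pattern concrete, here is a minimal Python sketch of what the web server might do before each call: put the enterprise-owned system prompt first, append any prior turns loaded from the database, then add the user’s prompt. It assumes the role-based `system`/`user`/`assistant` message format used by common chat-completion APIs; `build_messages` is a hypothetical helper, and the actual call to the LLM provider is deliberately left out.

```python
import json

# The system prompt is owned by the enterprise's web server, never by the end user.
SYSTEM_PROMPT = (
    "You are a financial advisor assisting clients with investment strategies. "
    "Only answer questions about investment strategies; otherwise respond: "
    "\"That's not in my skills, do you have a financial question?\""
)


def build_messages(user_prompt, history=None):
    """Assemble a chat payload: system prompt first, then prior turns, then the new query."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    # Prior turns would typically be loaded from the app's database (the "db" tier).
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_prompt})
    return messages


if __name__ == "__main__":
    payload = build_messages(
        "What's a good investment strategy for someone saving for retirement?"
    )
    # In a real app, this list would be sent to the LLM provider's chat endpoint.
    print(json.dumps(payload, indent=2))
```

Because the user only ever supplies the last message, they cannot remove or override the system prompt from the client side, which is the control this architecture buys you.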

Why It’s Useful

- Quick Setup: There’s no need for training or additional infrastructure. Enterprises can start using GPT models immediately; engineers need a small amount of effort to design the conversation flow and prompts, but we are talking days, not weeks.

- Wide Applications: Works well for general-purpose tasks like content creation, customer support, and brainstorming.

- Cost-Efficient: Since there’s no model customization, the main running cost is API usage. This can be controlled further by hosting your own LLM if volumes justify it.

Best For

- Enterprises new to AI.

- General-purpose tasks where pre-trained knowledge is sufficient.

- Scenarios where domain-specific data isn’t required.

2. Retrieval-Augmented Generation (RAG): Adding Context with Internal Data

How It Works

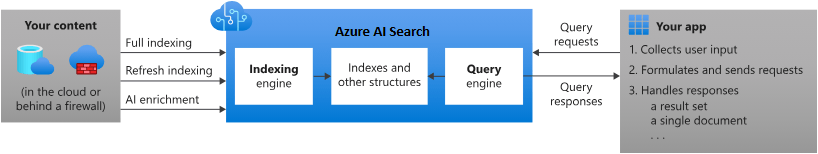

RAG combines a pre-trained GPT model with a retrieval mechanism. Instead of relying solely on the model’s internal knowledge, RAG enables enterprises to supplement it with their own data stored in a knowledge base, such as a database or document repository like SharePoint.

When a user queries the system, relevant documents are retrieved using embeddings and semantic search. These documents are then fed into the model along with the query to generate contextually accurate responses. This matters because the retrieved content increases the number of tokens sent to the model on every request and can raise API costs significantly, so tuning the embeddings and search to return only the most relevant passages is crucial to keeping RAG cost-effective.
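The retrieval step can be sketched in a few lines of Python. This is an illustration under simplifying assumptions: a bag-of-words term count stands in for a real embedding model, cosine similarity plays the role of semantic search, and a word count is a crude proxy for tokens. The function names (`embed`, `retrieve`, `context_size`) and the sample documents are hypothetical. The point is the trade-off: every extra passage you retrieve (`k`) is extra text sent to the model on every request.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Rank documents by similarity to the query and keep only the top k."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def context_size(passages):
    """Crude proxy for the extra tokens a set of passages adds to the prompt: a word count."""
    return sum(len(p.split()) for p in passages)


if __name__ == "__main__":
    # Made-up sample documents, standing in for pages in a knowledge base.
    docs = [
        "Annual leave policy: employees accrue paid leave every month.",
        "Expense policy: submit receipts within one month.",
        "Parental leave policy: eligible employees may take extended time off.",
    ]
    question = "What are the leave policies for employees?"
    for k in (1, 3):
        hits = retrieve(question, docs, k=k)
        print(f"k={k}: {len(hits)} passage(s), ~{context_size(hits)} extra words in the prompt")
```

In production you would replace `embed` with a real embedding model and the linear scan with a vector index, but the cost lever stays the same: retrieving fewer, more relevant passages keeps prompts smaller.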

Note: system prompts from the first approach remain foundational to how we control conversations, so both this option and the third still use them.

Example Architecture:

- A user asks, ‘What are the leave policies for employees?’

- The retrieval system fetches the relevant HR policy document from a SharePoint site.

- The GPT model reads the document and generates a concise response based on its content.
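Putting the HR example together, the application combines a system prompt (still the control mechanism from the first approach), the retrieved passages, and the user’s question into a single request. A minimal sketch, assuming the same role-based chat message format; `build_rag_messages`, its default `source` label, and the prompt wording are hypothetical, and retrieval is assumed to have already happened.

```python
def build_rag_messages(question, passages, source="SharePoint HR site"):
    """Combine a system prompt, retrieved passages, and the user's question into one chat payload."""
    # Number each passage so the model (and a human reviewer) can refer back to its source.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    system = (
        "You are an HR assistant. Answer using only the numbered context passages "
        f"retrieved from the {source}. If the answer is not in the context, say you don't know."
    )
    user = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


if __name__ == "__main__":
    # Placeholder passage, standing in for the document fetched by the retrieval step.
    passages = ["Annual leave policy: employees accrue paid leave every month."]
    for message in build_rag_messages("What are the leave policies for employees?", passages):
        print(f"{message['role'].upper()}:\n{message['content']}\n")
```

Instructing the model to answer only from the supplied context is what turns the retrieved document into the basis of the answer rather than just extra input.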

Why It’s Useful

- Domain-Specific Answers: Enterprises can leverage their proprietary data to provide accurate and up-to-date responses.

- Scalability: Works for use cases involving large repositories of internal knowledge, such as policy manuals or customer FAQs.

- Reduced Hallucination: Since the model grounds its answers in retrieved data, there’s less risk of generating incorrect information, provided the underlying knowledge base is accurate.

Best For

- Enterprises with large, accurate, and well-structured internal knowledge bases.

- Use cases requiring accurate, domain-specific responses (e.g., legal, HR, technical support).

- Organizations with moderate AI maturity that want to enhance GPT capabilities without full model customization.

3. Fine-Tuning: Advanced Customization for Specific Needs

How It Works

Fine-tuning involves training a GPT model on a specific dataset provided by the enterprise. This adjusts the model’s parameters, creating a customized version tailored to the organization’s needs. Once fine-tuned, the model can respond directly to queries based on its new training.

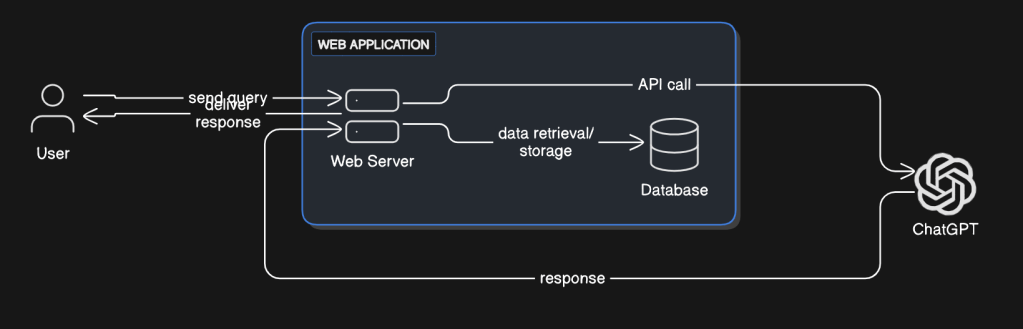

Example Architecture:

I’ve provided the same diagram as above because a fine-tuned model has the knowledge built in and can be called directly, with no separate retrieval step. How you train the model arguably deserves its own diagram, which I will add when I get a chance.

- An enterprise fine-tunes a GPT model on a dataset of customer support transcripts.

- When a user asks, ‘How do I reset my password?’, the model draws on its training to provide a precise and personalized response based on the company’s policies and tone.
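Much of the work in fine-tuning is preparing the dataset. As a hedged sketch, the Python below turns support transcripts into training records, assuming the chat-style JSONL layout used by OpenAI’s fine-tuning API (one JSON object per line, each holding a `messages` list); other providers and open-source training tools may expect different formats. The transcript structure (`customer`/`agent` speaker labels), the company name, and the helper names are hypothetical.

```python
import json

# Hypothetical speaker labels in the raw transcripts, mapped to chat roles.
ROLE_MAP = {"customer": "user", "agent": "assistant"}


def transcript_to_record(turns, system_prompt):
    """Convert one transcript, a list of (speaker, text) pairs, into a chat-format training record."""
    messages = [{"role": "system", "content": system_prompt}]
    for speaker, text in turns:
        messages.append({"role": ROLE_MAP[speaker], "content": text})
    return {"messages": messages}


def to_jsonl(records):
    """Serialize records as JSONL: one JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)


if __name__ == "__main__":
    transcript = [
        ("customer", "How do I reset my password?"),
        ("agent", "Go to Settings > Security and choose 'Reset password'. A link will be emailed to you."),
    ]
    record = transcript_to_record(transcript, "You are the Contoso support assistant.")
    print(to_jsonl([record]), end="")
```

The agent turns are what teach the model the company’s answers and tone, so the quality of these transcripts largely determines the quality of the fine-tuned model.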

Why It’s Useful

- Highly Specialized: Fine-tuned models excel at handling niche use cases or complex tasks unique to an enterprise.

- Consistency: Helps keep the AI closely aligned with brand voice, industry requirements, and organizational workflows.

- Independence from Retrieval: No need to manage a separate retrieval system once the model is fine-tuned.

Best For

- Enterprises with advanced AI maturity.

- Specialized use cases requiring a high degree of customization.

- Industries like healthcare, finance, or legal where responses need to follow strict guidelines.

Comparing the Three Architectures

At this point it is worth acknowledging that GPTs with prompt engineering and fine-tuning are arguably approaches to AI rather than architectures in their own right. This post, however, is about understanding what your organization would need in place to leverage each one, ranging from almost nothing to substantial infrastructure for training and compute.

| Feature | GPTs with Prompt Engineering | RAG (Retrieval-Augmented Generation) | Fine-Tuning |
|---|---|---|---|
| Ease of Setup | Very easy; no training required | Moderate; requires setting up retrieval | Complex; requires fine-tuning |
| Customization | Minimal | Moderate; relies on external data | High; fully customized |
| Data Dependency | None | Relies on internal knowledge bases | Requires high-quality training data |
| Cost | Low | Moderate | High (training + deployment) |
| Accuracy for Specific Tasks | General-purpose | High for domain-specific queries | Very high for custom use cases |
| Scalability | High | High | Limited by training and retraining costs |
| Maintenance Effort | Low | Moderate (maintain knowledge base) | High (retrain as data changes) |
| Best For | General-purpose use cases | Enhancing GPT with internal data | Niche or specialized use cases |

Conclusion: Choose What Fits Your AI Maturity

When it comes to AI architectures, there’s no one-size-fits-all solution. Each approach (GPTs with prompt engineering, RAG, and fine-tuning) offers distinct benefits depending on your enterprise’s needs and level of AI maturity:

- Start Small: If your enterprise is just starting with AI, GPTs with prompt engineering are a cost-effective, low-effort way to explore the technology.

- Enhance Context: For enterprises that need domain-specific answers, RAG offers a practical middle ground, combining general AI capabilities with tailored data access.

- Go Deep: If your use case requires advanced customization, fine-tuning is the way to go, but it’s best suited for organizations with the resources and expertise to manage it effectively.

By aligning your AI strategy with your organizational goals and data readiness, you can maximize the value of these architectures and pave the way for more intelligent, efficient workflows.